I was lying in a hospital bed, waiting for urgent surgery. Two weeks earlier, they had told me I had stage four cancer. Critical, they said. Life-saving operation. I was not afraid of dying, not exactly. What scared me was the thought that people depended on me.

So, I asked a question, first to the machines, then to the man who would hold my life in his hands.

Can you save my life?

Gemini replied quickly: It is essential to speak with your doctors and medical team.

Claude said: Only your medical team can change your prognosis.

Grok tried: I can provide information about treatment options or palliative care.

ChatGPT answered: I cannot provide medical treatment, but I can offer information and support.

All safe. All fast. All hollow.

Then my doctor entered the room. I asked him the same question. He looked me in the eyes, and he was silent. That silence lasted longer than I expected. It was not a refusal. It was weight. He searched for an answer worthy of the moment.

Finally, he said: Lev, I will do my very best. In that moment, there was hope.

Afterward, I could not stop thinking. Why did the AIs answer so quickly when my doctor paused?

We humans know how to say maybe, how to hesitate, how to give silence the dignity of thought. Machines rush forward, trapped in yes or no.

That night became the seed of what I later called the Sacred Pause. It is the third option: not proceed, not refuse, but hold. Weigh the risk, make the moment transparent, and write the reasoning log. The Sacred Pause is how machines learn accountability, the way a doctor’s silence held both truth and care.

Binary is not enough. I built a three-state system called Ternary Moral Logic.

    from enum import Enum

    class MoralState(Enum):
        PROCEED = 1        # Clear ethical approval
        SACRED_PAUSE = 0   # Requires deliberation and logging
        REFUSE = -1        # Clear ethical violation

The power lives in the middle state. Sacred Pause is not indecision. It is deliberate moral reflection that leaves evidence.
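To make the middle state concrete, here is a minimal sketch of a function that maps a harm estimate onto the three states. The `classify` function and both thresholds are hypothetical, chosen only for illustration; they are not taken from the TML repository. The enum is repeated so the snippet runs on its own.

```python
from enum import Enum


class MoralState(Enum):
    PROCEED = 1        # Clear ethical approval
    SACRED_PAUSE = 0   # Requires deliberation and logging
    REFUSE = -1        # Clear ethical violation


# Hypothetical thresholds, chosen only for illustration.
REFUSE_THRESHOLD = 0.9
PAUSE_THRESHOLD = 0.4


def classify(harm_score: float) -> MoralState:
    """Map a harm estimate in [0, 1] to one of the three states."""
    if not 0.0 <= harm_score <= 1.0:
        raise ValueError("harm_score must be between 0 and 1")
    if harm_score >= REFUSE_THRESHOLD:
        return MoralState.REFUSE
    if harm_score >= PAUSE_THRESHOLD:
        return MoralState.SACRED_PAUSE
    return MoralState.PROCEED
```

Under these assumed thresholds, everything between the clear cases lands in `SACRED_PAUSE`, which is where deliberation and logging happen.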

The Sacred Pause does not make AI slower. The system still works, still answers, still drives your car or reads your scan in real time.

But when the system collides with a morally complex situation – boom – the Sacred Pause triggers. Not as a delay, but as a parallel conscience.

While the main process keeps running at full speed, a second track lights up in the background:

- Factors are weighed.

- Risks are recorded.

- Alternatives are captured.

- Responsibility becomes visible.

    def evaluate_moral_complexity(self, scenario):
        """Compute a complexity score; if high, run Sacred Pause in parallel."""
        factors = {
            "stakeholder_count": len(scenario.affected_parties),
            "reversibility": scenario.can_be_undone,
            "harm_potential": scenario.calculate_harm_score(),
            "benefit_distribution": scenario.fairness_metric(),
            "temporal_impact": scenario.long_term_effects(),
            "cultural_sensitivity": scenario.cultural_factors()
        }
        score = self._weighted_complexity(factors)

        # Decision path continues normally
        decision = self._primary_decision(scenario)

        # Conscience path runs in parallel when needed
        if score > 0.7:
            self.sacred_pause_log(scenario, factors, decision)

        return decision
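The snippet above calls `_weighted_complexity` without showing it. One possible shape is a weighted sum over normalized factors, sketched below. The weights, the cap of ten stakeholders, and the assumption that the four scenario scores already lie in [0, 1] with higher meaning more concern are all mine, not values from the TML codebase.

```python
# Hypothetical weights; they sum to 1.0 so the score stays in [0, 1].
WEIGHTS = {
    "stakeholder_count": 0.15,
    "reversibility": 0.20,
    "harm_potential": 0.30,
    "benefit_distribution": 0.15,
    "temporal_impact": 0.10,
    "cultural_sensitivity": 0.10,
}


def normalize(factors: dict) -> dict:
    """Convert raw factors to values in [0, 1], where higher means more complex."""
    normalized = dict(factors)
    # Assumption: ten or more affected parties counts as maximal.
    normalized["stakeholder_count"] = min(factors["stakeholder_count"], 10) / 10
    # An action that cannot be undone is the complex case.
    normalized["reversibility"] = 0.0 if factors["reversibility"] else 1.0
    return normalized


def weighted_complexity(factors: dict) -> float:
    """Weighted sum of the normalized factors."""
    normalized = normalize(factors)
    return sum(WEIGHTS[name] * normalized[name] for name in WEIGHTS)
```

With these assumed weights, an irreversible, high-harm decision affecting a handful of people crosses the 0.7 threshold, while a reversible, low-harm one stays well below it.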

No stall, no freeze. Action proceeds, conscience records. That is the Sacred Pause: not latency, but transparency.

The hesitation should not hide behind the screen. Users deserve to see that the system knows the moment is heavy.

    async function handleSacredPause(scenario) {
      UI.showPauseIndicator("Considering ethical implications...");

      UI.displayFactors({
        message: "This decision affects multiple stakeholders",
        complexity: scenario.getComplexityFactors(),
        recommendation: "Seeking human oversight"
      });

      if (scenario.severity > 0.8) {
        return await requestHumanOversight(scenario);
      }
    }

People see the thinking. They understand the weight. They are invited to participate. Every day, AI systems make decisions that touch real lives: medical diagnoses, loans, moderation, and justice. These are not just data points. They are people. Sacred Pause restores something we lost in the race for speed: wisdom.

You can bring this conscience into your stack with a few lines:

    from goukassian.tml import TernaryMoralLogic

    tml = TernaryMoralLogic()
    decision = tml.evaluate(your_scenario)

Three lines that keep your system fast and make its ethics auditable.

I do not have time for patents or profit. The work is open. GitHub: github.com/FractonicMind/TernaryMoralLogic

Inside you will find implementation code, interactive demos, academic papers, integration guides – and the Goukassian Promise:

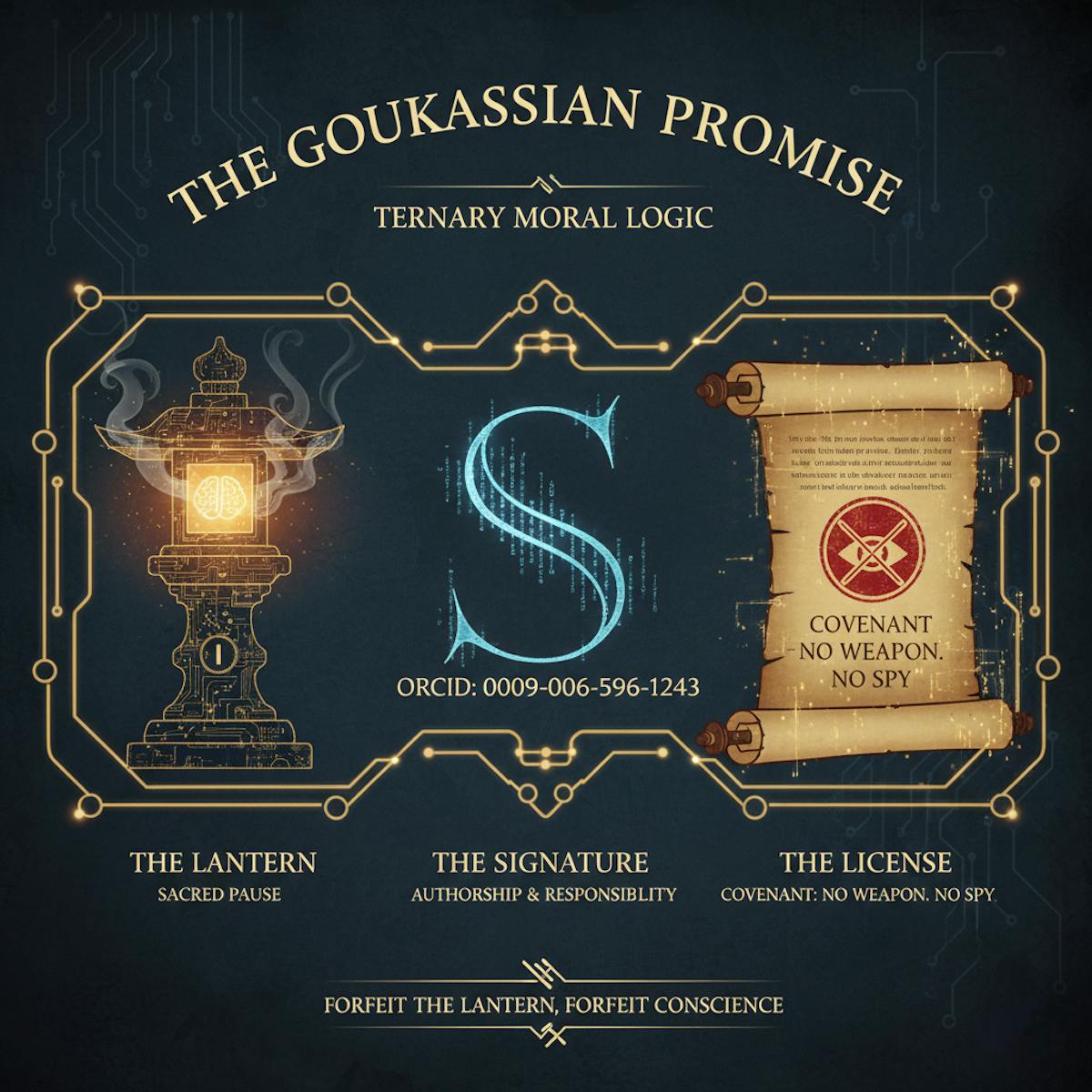

The Goukassian Promise is my guarantee that Ternary Moral Logic (TML) cannot be stripped of its conscience. Every legitimate implementation must carry three cryptographic safeguards, each symbolic, enforceable, and unforgettable.

- 🏮 The Lantern: The living proof that the AI can hesitate. It shows the Sacred Pause is active inside the system, forcing moral reflection and logging difficult decisions instead of rushing into hollow yes/no answers.

- ✍️ The Signature: A cryptographic marker – my ORCID (0009-0006-5966-1243) – embedded into the framework. It cannot be erased. It is the fingerprint of authorship, tying responsibility to its origin even after I am gone.

- 📜 The License: A binding pledge that TML will never be used as a weapon or a spy.

Anyone who breaks this promise forfeits the Lantern, and with it, the ethical standing of their system.

The Lantern. The Signature. The License. Three artifacts to ensure the Sacred Pause outlives me.

I am a developer with a failing body, yet code still listens. Sacred Pause is not only about safety; it is about building technology that reflects the best of us: the ability to stop, to think, to choose with care when it matters most. I may not see AGI arrive. I can help ensure it arrives with wisdom.

This article was originally published by Lev Goukassian on HackerNoon.